🔎 AI Gets Personal, Leaked Shopify Memo, Resurrecting Extinct Species

What I'm tracking: AI's Leap, Workplace Shifts, and De-Extinction

What happens when AI knows you better than you know yourself?

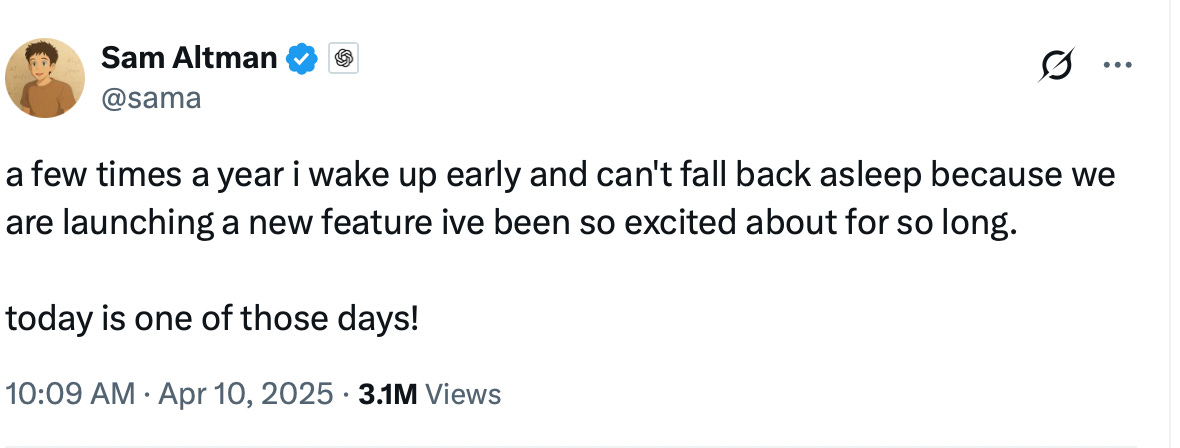

Last Thursday, OpenAI rolled out a feature that CEO Sam Altman was extremely excited about: long-term memory for ChatGPT.

This means the AI can now reference all your past conversations, even those not explicitly saved, to provide a personalized, context-aware experience. The feature is a potential game-changer, and it heats up the competition among AI companies to become your go-to assistant.

Here's why:

Think about how valuable having a personal assistant is. But imagine you had to hire a completely new personal assistant every single morning. Each day, you'd waste valuable time explaining your preferences, routines, key contacts, even simple things like how you take your coffee. Now, contrast that with an assistant who works alongside you for years, maybe decades. They learn your rhythms, anticipate your needs, and act proactively: seeing a wedding on your calendar, knowing you hate shopping, and handling the dress and shoe order seamlessly.

Or consider a more personal scenario. Say that 12 months ago, feeling uninspired at work, you brainstormed with ChatGPT about a dream job – perhaps leading marketing at a startup. You put the idea aside. Fast forward a year: you're at a party and exchange contacts. Later, your AI flags one contact: she runs a VC firm investing in early-stage startups. You might have forgotten your dream, but your AI hasn't. It connected the dots.

Memory is likely to become a critical factor in the AI competition. Deep personalization creates powerful switching costs and user lock-in. If your current assistant knows you intimately, switching means training a new one from scratch, which makes you far less likely to leave. It's like moving from the Apple ecosystem to Android, but taken to a whole new level.

But there are some pretty big risks to consider. If a hacker gained access to this deep memory storage, imagine the trove of personal data exposed. Worse, consider the possibility of memory alteration. A malicious actor could subtly sway your opinions or insert biases by manipulating your AI's memory of past interactions, potentially influencing everything from voting patterns to healthcare choices. At scale, this capability could enable social engineering campaigns unlike anything we've seen.

The AI that becomes our primary, memory-enabled assistant holds immense power, potentially shaping our perspectives as we grow alongside it for years to come. We need to approach this level of integration with our eyes wide open.

Leaked Shopify Memo: Using AI effectively is “now a fundamental expectation”

A leaked memo by Shopify's CEO made waves last week, highlighting directives like “hire an AI before you hire a human,” making AI use a core job expectation, adding AI skills to performance reviews, and calling AI agents "teammates."

My take? Evaluating whether AI can complete certain tasks before hiring humans will soon become standard practice across companies. But I'm curious what Shopify's AI training programs look like. Who will be in charge of evaluating employees’ AI skills at Shopify? Managers, who might still be learning AI themselves? And how do you standardize this when AI's usefulness varies so much by job function?

Treating AI agents as "teammates"? Directionally correct, but mostly aspirational for now, given current AI limitations.

Still, the memo underscores that the AI workforce disruption has begun. In the near term, expect hiring slowdowns and skill shifts rather than mass layoffs.

What does this mean for us? We have to actively integrate AI into our daily workflows. (Responsibly, of course! Don’t share sensitive company data if your organization hasn’t explicitly authorized it.) Even if your firm hasn’t rolled out a formal AI strategy, AI proficiency is rapidly becoming an implicit expectation.

The Era of De-Extinction: Resurrecting the Dire Wolf

Colossal Biosciences recently announced the birth of three pups that it claims mark the "de-extinction" of the ice-age dire wolf, a species extinct for roughly 10,000-12,000 years (though it made a digital comeback in shows like Game of Thrones). The reality, however, is more nuanced. Colossal didn't revive the species from a complete genome; instead, it genetically modified living gray wolf cells, making 20 edits based on ancient DNA snippets to express certain dire wolf traits. The gene-edited pups reportedly look healthy and roam a private US enclosure.

Why pursue such complex projects? The stated goal is often ecological: restoring lost biodiversity and potentially rebalancing habitats vital for climate stability. However, many challenges and ethical questions remain. Could a species extinct for millennia cope with our drastically changed modern world? Ecosystems have already adapted in their absence. And concerns inevitably arise about exploitation by black markets or zoos.

Putting aside the specific goal of de-extinction, the underlying scientific advancements are significant. The process refines techniques in ancient DNA analysis, complex multi-gene editing with tools like CRISPR, and advanced reproductive technologies. These are powerful tools already proving helpful in developing therapies for human genetic diseases and potentially boosting disease resistance.

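As for visions of Jurassic Park? That remains firmly in the realm of fiction for now. A widely cited estimate puts DNA's half-life at about 521 years, and even under ideal cold conditions every bond would be broken after about 6.8 million years, with strands becoming too short to read long before that. Non-avian dinosaurs died out roughly 66 million years ago.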
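For a rough feel of what a 521-year half-life implies, here is a minimal sketch of simple exponential decay. The function name and the constant-rate assumption are mine, not from any study; real decay rates depend heavily on temperature and burial conditions, so treat the numbers as illustrative only.

```python
HALF_LIFE_YEARS = 521  # the half-life figure quoted above


def fraction_intact(years: float, half_life: float = HALF_LIFE_YEARS) -> float:
    """Fraction of DNA bonds still intact after `years`.

    Assumes a constant decay rate (a simplification for illustration).
    """
    if years < 0:
        raise ValueError("years must be non-negative")
    # Each half-life that passes cuts the intact fraction in half.
    return 0.5 ** (years / half_life)


if __name__ == "__main__":
    for years in (521, 10_000, 100_000):
        print(f"{years:>7,} years: {fraction_intact(years):.3e} of bonds intact")
```

Under this simple model, the dire wolf's roughly 10,000 years already amounts to about 19 half-lives, which is part of why Colossal worked from DNA snippets rather than a complete genome.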

But my philosophy regarding technology is this: few things are truly impossible. Often, we just lack the right tools or understanding.


Super insightful read!

Feels like we’re entering an era where every job description quietly has a new first question: "Why not AI?" 😂